
Today we will talk about AWS's most widely used storage service, Amazon Simple Storage Service, commonly known as Amazon S3. As a Site Reliability Engineer, I use S3 extensively for day-to-day operations such as data transfer, log storage, disaster recovery (clients' databases and documents), and fulfilling developers' requests. When working on Linux servers, the AWS S3 CLI commands save a lot of time and make the work more efficient. Here are some commands that can make the job easier:

PREREQUISITES

Make sure to attach the correct S3 policy and configure the AWS CLI with valid access keys on both the EC2 instance and your local machine. For this activity, I am using the AmazonS3FullAccess policy for my IAM user.
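Before running any S3 command, it can help to confirm that the CLI really has working credentials. The following is a minimal sketch, assuming a hypothetical helper named `check_aws_credentials` (not part of the AWS CLI) that asks STS which identity the configured keys belong to:

```shell
# Hypothetical helper: prints the ARN of the identity the CLI is using,
# or fails with a hint when no usable credentials are configured.
check_aws_credentials() {
  if aws sts get-caller-identity --query Arn --output text; then
    return 0
  fi
  echo "AWS CLI is not configured; run 'aws configure' first" >&2
  return 1
}
```

Calling `check_aws_credentials` on the EC2 instance and on the local machine should print the IAM user's ARN on each if the keys are set up correctly.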

COMMANDS

Creating an S3 bucket

- Here I am creating an S3 bucket with the name "backupdocsdivyam"

aws s3 mb s3://{bucketname}/

[ec2-user@ip-172-31-5-124 ~]$ aws s3 mb s3://backupdocsdivyam/

make_bucket: backupdocsdivyam

[ec2-user@ip-172-31-5-124 ~]$
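Bucket names must follow S3's naming rules: 3 to 63 characters, made up of lowercase letters, digits, dots, and hyphens, and starting and ending with a letter or digit. Here is a rough sketch, assuming two hypothetical helpers (`is_valid_bucket_name` and `make_bucket_checked`) that cover only these basic rules before calling `aws s3 mb`:

```shell
# Hypothetical helper (not part of the AWS CLI): checks only the basic
# naming rules, i.e. length 3-63, lowercase letters, digits, dots and
# hyphens, starting and ending with a letter or digit.
is_valid_bucket_name() {
  printf '%s' "$1" | LC_ALL=C grep -Eq '^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$'
}

# Create the bucket only if the name passes the basic check.
make_bucket_checked() {
  if is_valid_bucket_name "$1"; then
    aws s3 mb "s3://$1"
  else
    echo "invalid bucket name: $1" >&2
    return 1
  fi
}
```

For example, `make_bucket_checked backupdocsdivyam` would go ahead and create the bucket, while a name with uppercase letters would be rejected locally before any request is sent.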

Uploading a file to an S3 bucket

- Here I am uploading a file "building_secure_and_reliable_systems.pdf" to my s3 bucket

aws s3 cp building_secure_and_reliable_systems.pdf s3://backupdocsdivyam/

upload: ./building_secure_and_reliable_systems.pdf to s3://backupdocsdivyam/building_secure_and_reliable_systems.pdf

divyam_sharma@DESKTOP-UDAPQVH:/mnt/c/Users/HP/Desktop/SRE$

Document uploaded!
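To confirm an upload from the command line rather than the console, the copy can be followed by a listing of the same key. A minimal sketch, assuming a hypothetical helper named `upload_and_verify` that takes a local file and a bucket name:

```shell
# Hypothetical helper: upload one file to the bucket root, then list the
# uploaded key so the result is visible in the terminal.
upload_and_verify() {
  file=$1
  bucket=$2
  key=$(basename "$file")
  aws s3 cp "$file" "s3://$bucket/$key" && aws s3 ls "s3://$bucket/$key"
}
```

Usage would look like `upload_and_verify building_secure_and_reliable_systems.pdf backupdocsdivyam`.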

Syncing documents to the S3 bucket

Sync is a powerful command! The s3 sync command synchronizes the contents of a bucket and a directory or the contents of two buckets.

Here I want to create a folder named AWS in my bucket and sync the AWS folder on my local machine to it

aws s3 sync /path/to/directory/ s3://{bucketname}/{folder}/

aws s3 sync /mnt/c/Users/HP/Desktop/SRE/AWS s3://backupdocsdivyam/AWS/

Folder created and Documents synced!

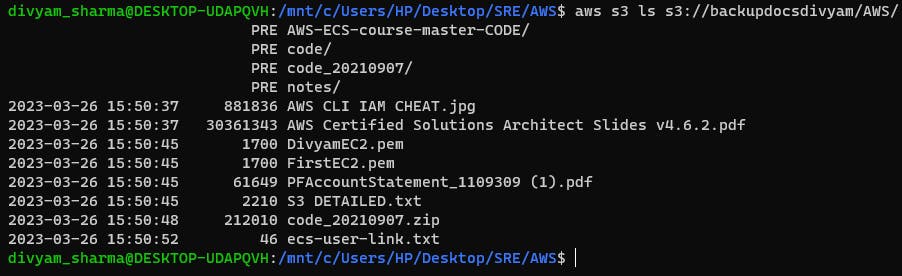
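Because sync can transfer many files at once, it is often worth previewing the operation first. The sketch below assumes a hypothetical wrapper named `sync_preview`; the `--dryrun` and `--exclude` options are standard `aws s3 sync` options, while the `*.tmp` pattern is only an illustrative choice:

```shell
# Hypothetical wrapper: show what sync would transfer without changing
# anything. The "*.tmp" exclude pattern is an example, not a requirement.
sync_preview() {
  aws s3 sync "$1" "$2" --dryrun --exclude "*.tmp"
}
```

For instance, `sync_preview /mnt/c/Users/HP/Desktop/SRE/AWS s3://backupdocsdivyam/AWS/` prints the planned uploads; running the same `aws s3 sync` command without `--dryrun` then performs them.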

Listing the objects of the S3 bucket

- Notice that each object is listed with its last-modified date.

aws s3 ls s3://{bucket_name}
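The listing can also include nested folders and a total count and size. A small sketch, assuming a hypothetical helper named `bucket_summary` and assuming the summary occupies the last two lines of the `--summarize` output:

```shell
# Hypothetical helper: list every object in the bucket recursively and
# keep only the trailing "Total Objects" / "Total Size" summary lines.
bucket_summary() {
  aws s3 ls "s3://$1" --recursive --human-readable --summarize | tail -n 2
}
```

Running `bucket_summary backupdocsdivyam` gives a quick view of how much data the bucket holds; dropping the `tail` shows the full recursive listing.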

Searching for an object in the bucket

- Here I want to search for a file containing "pem" in its name. Other commands can also search for files by their last-modified date.

aws s3 ls --recursive s3://{path_to_search}/ | grep -i "file_keyword"
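Searching by modified date can be done by filtering the same listing, since each line starts with the date in YYYY-MM-DD form. A minimal sketch, assuming a hypothetical filter named `modified_since` that reads the `aws s3 ls --recursive` output on standard input:

```shell
# Hypothetical filter: keeps only the lines whose first field (the
# last-modified date) is on or after the given YYYY-MM-DD date.
# ISO dates sort lexicographically, so a string comparison is enough;
# appending "" forces awk to compare the values as strings.
modified_since() {
  awk -v since="$1" '($1 "") >= (since "")'
}
```

It can be chained with the keyword search, for example `aws s3 ls --recursive s3://backupdocsdivyam/ | grep -i "pem" | modified_since 2023-01-01`.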

I have shared some useful AWS S3 CLI commands that can simplify your day-to-day operations and save time. With these commands, you can create a new bucket, upload files, sync directories, list objects, and search for specific files in the S3 bucket.

In the next post, I will share more commands that can help you manage your data efficiently and ensure reliable disaster recovery. Incorporating these commands into your workflow can make you more productive as an SRE and help you deliver better results to your organization.